Our client was a state health agency that had recently been mandated to improve nursing home transparency. The agency was required to create a platform for state residents to search, compare, and evaluate nursing homes. It also used the opportunity to create an internal tool to monitor underperforming nursing homes.

This project was interesting because I served as UX designer, data analyst, and data engineer. The public dashboard we built would later be used by 5,000+ people. I learned how to user test, rapidly iterate, and design with empathy for users.

I worked on a team with one other designer and one Tableau developer. The Tableau developer and I worked on the internal tool, while the other designer worked on the public dashboard. I owned designs and conducted testing and research.

Problem statement

Constraints

The tools had to be built in Tableau. In addition, user personas varied in how familiar they were with the technical terms in the data.

Outcome

The internal dashboard has been used to improve safety standards at 15+ problematic nursing homes. The public dashboard has been used by 5,000+ people since inception.

Time

Six months

Team

2 designers

1 Tableau developer

My role

UX design

Data engineering

Product design

To begin, we reviewed the relevant state legislation to scope the project. A recently passed bill outlined the requirements for the external dashboard.

Early on, we stressed the importance of speaking with users to identify their pain points. We also expanded our interview group to include personas we hadn’t initially considered, such as advocates and public health officials.

“What is something you want to do in one year that we can help you do today?”

We documented all our interviews and consolidated them in a Miro board. Based on the feedback, I framed the problem statement as: "We want a way to see how nursing homes across the state are trending, identify outliers, and drill down into underperforming nursing homes."

I roughed out the average user journey through the tool. To keep it simple enough for a layperson, the idea had to fit on the back of a napkin.

Was the internal tool going to be structured like DoorDash? Or like a news website? To work out the information architecture, I mapped the average user journey and focused on the call to action, or the "So what?", of every page.

Once I had drawn out the user journey, I realized the client first needed a summary of how the state was doing (Overview). Next, they wanted a way to compare facilities against each other (LTC scorecard). Finally, they wanted to drill into a particular facility for more detail.

Through this exercise, we realized there were two levels of data granularity: one at the state level and one at the facility level.
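To make the two levels concrete, here is a minimal Python sketch of rolling facility-level rows up into a state-level summary and flagging facilities worth drilling into. The dashboards themselves were built in Tableau; the `FacilityRecord` fields, the rating scale, and the threshold are hypothetical placeholders, not the agency's actual metrics.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class FacilityRecord:
    """One facility-level row. Field names are hypothetical, for illustration."""
    facility_id: str
    rating: float  # hypothetical quality score; higher is better


def state_summary(facilities: list[FacilityRecord]) -> dict[str, float]:
    """Roll facility-level rows up to a single state-level summary (Overview)."""
    ratings = [f.rating for f in facilities]
    return {
        "facility_count": len(ratings),
        "average_rating": mean(ratings),
        "lowest_rating": min(ratings),
    }


def flag_underperformers(facilities: list[FacilityRecord], threshold: float) -> list[str]:
    """Return IDs of facilities rated below the threshold, i.e. candidates to drill into."""
    return [f.facility_id for f in facilities if f.rating < threshold]
```

With three facilities rated 4.0, 2.0, and 3.0, `state_summary` returns `{'facility_count': 3, 'average_rating': 3.0, 'lowest_rating': 2.0}`, and `flag_underperformers(facilities, 2.5)` returns `['F002']` for the facility rated 2.0 with ID `F002`.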

From here, I created wireframes and tested the navigation structure with our clients.

Next, we turned to Figma to create rapid mockups based on our information architecture. The visual design of the tool changed drastically between design critiques and testing sessions.

Because this was one of my earlier projects, it taught me the importance of typographic hierarchy and of using color sparingly. At one point, I switched to mocking up designs in black and white to focus on the message rather than the UI.

Throughout this project, we prioritized which metrics to show before deciding how to show them. I learned that users care less about which chart type you use and more about which metric you highlight, which tab it's under, and whether it's labeled correctly.

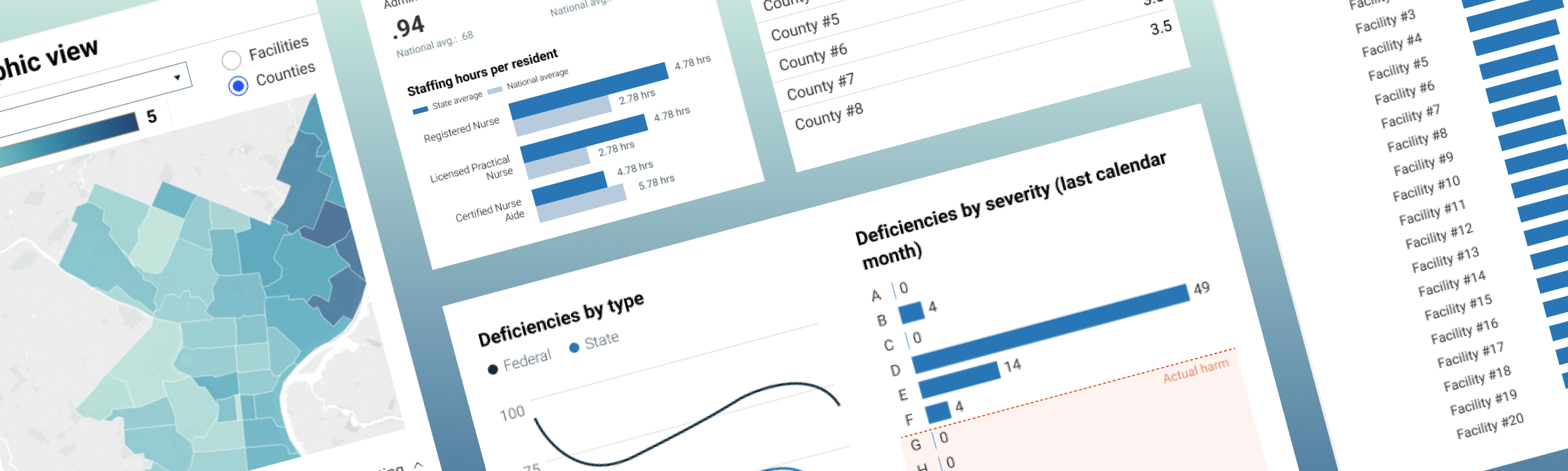

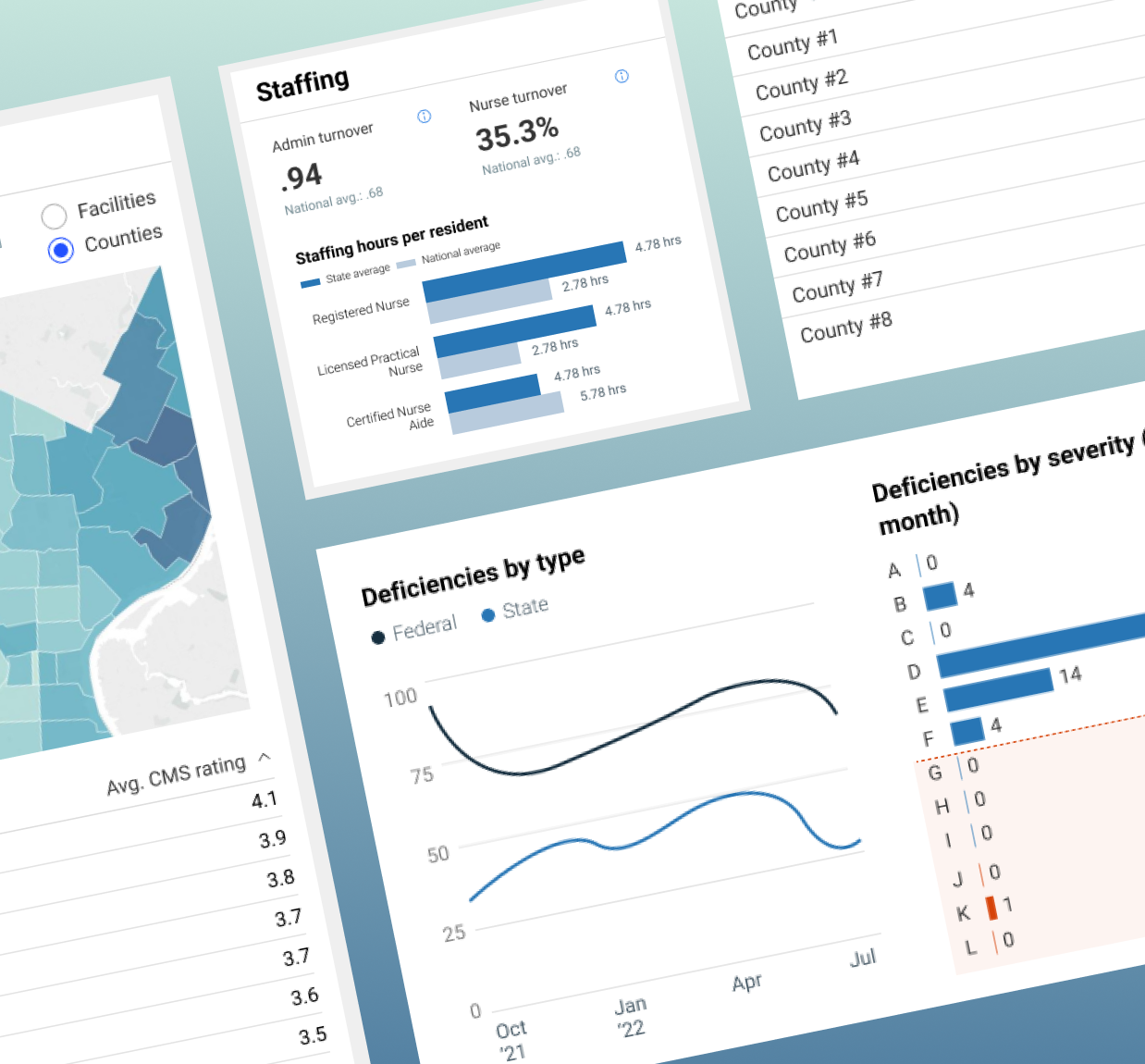

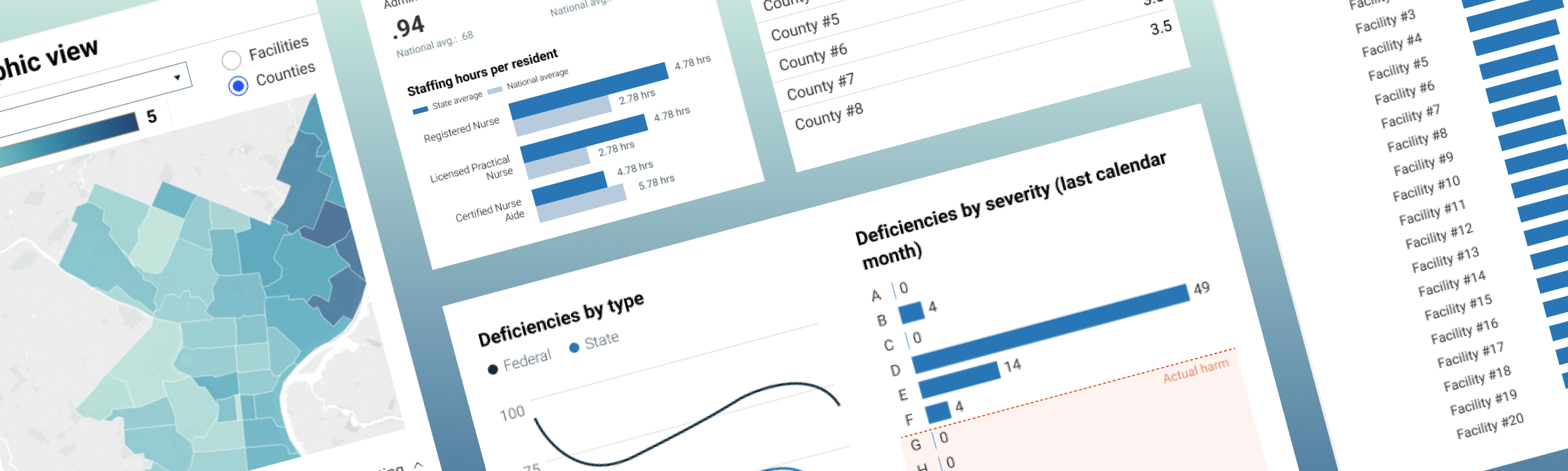

For example, our early wireframes didn't include a map of the state on the Overview page. But during user research, many people stressed the importance of finding "clusters" of poor performance, or COVID-19 "outbreak areas." When we mocked up a map, users were mildly interested but didn't yet see the value. After some iterating and tweaking, we prototyped a map that could toggle between counties and facilities. This excited our users, who said it would give them perspectives they'd never had before.

Throughout the above processes, we conducted several rounds of user testing. There were two takeaways that I now apply to all projects.

First, there is one group of stakeholders that is typically overlooked in product design:

Developers are also stakeholders. During user testing, I shared designs with developers to get their feedback on what was feasible and whether the data could support our designs. This let us catch issues early instead of redoing our prototypes.

Second, prototype with real data. Even when we opened testing sessions with “this is all fake data,” our client would try to find insights in lorem ipsum, or ask how there were suddenly 123,456 facilities in the state. When we prototyped with real data instead, users could better put themselves in the driver's seat, and testing became more fruitful.

State legislation required the public tool to be updated at least quarterly. After combing through the data, we learned that nursing homes frequently change their names, which meant the tool had to be updated more often than that. Given how frequently some data points changed, we settled on monthly updates.
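A minimal sketch of how a monthly refresh might surface renamed facilities, assuming each facility carries a stable identifier that does not change when it is renamed. That identifier and the function name `find_renamed_facilities` are hypothetical; the sketch only compares two snapshots that map ID to display name.

```python
def find_renamed_facilities(
    previous: dict[str, str], current: dict[str, str]
) -> dict[str, tuple[str, str]]:
    """Compare two snapshots mapping a (hypothetical) stable facility ID to its name.

    Returns {facility_id: (old_name, new_name)} for every facility whose name
    changed. Newly opened or closed facilities are ignored here.
    """
    return {
        facility_id: (previous[facility_id], name)
        for facility_id, name in current.items()
        if facility_id in previous and previous[facility_id] != name
    }


previous = {"F001": "Maple Grove Care", "F002": "Riverside Manor"}
current = {"F001": "Maple Grove Care", "F002": "Riverside Health & Rehab", "F003": "Oak Hill"}
print(find_renamed_facilities(previous, current))
# {'F002': ('Riverside Manor', 'Riverside Health & Rehab')}
```

Keying on an ID rather than a name is the design choice that matters: a join on names would treat a renamed facility as one closure plus one new opening.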

I worked with the Tableau developer and the client’s IT team to develop a data pipeline to extract, transform, and load (ETL) data into Amazon S3. I wrote scripts to consolidate files into three tables, ready for ingestion into Athena and Tableau.
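The consolidation step might look something like this minimal sketch: it stacks CSV extracts that share a header into one table that Athena could query once the file is uploaded to S3. The file layout, the `source_file` column, and the function name are hypothetical, and the S3 upload and Athena table definition are omitted.

```python
import csv
from pathlib import Path


def consolidate_csvs(paths: list[Path], output: Path) -> int:
    """Stack CSV extracts that share a header into a single table.

    Adds a source_file column so each row can be traced back to its extract.
    Returns the number of data rows written.
    """
    rows_written = 0
    expected_header = None
    writer = None
    with output.open("w", newline="") as out:
        # Sort so rows appear in a predictable order (e.g. by month in the file name).
        for path in sorted(paths):
            with path.open(newline="") as f:
                reader = csv.DictReader(f)
                header = list(reader.fieldnames or [])
                if expected_header is None:
                    # The first file defines the schema for the consolidated table.
                    expected_header = header
                    writer = csv.DictWriter(out, fieldnames=header + ["source_file"])
                    writer.writeheader()
                elif header != expected_header:
                    # Fail loudly rather than silently misaligning columns.
                    raise ValueError(f"{path.name} has an unexpected header: {header}")
                for row in reader:
                    row["source_file"] = path.name
                    writer.writerow(row)
                    rows_written += 1
    return rows_written
```

Rejecting mismatched headers is a deliberate choice: a schema drift in one monthly extract is easier to debug at consolidation time than after it reaches a dashboard.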

Some Python scripts consumed the outputs of others, and the source files were updated at different frequencies.
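Because some scripts read the outputs of others, run order matters, and refreshing one source means re-running everything downstream of it. One way to sketch this is with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The script names and dependencies below are hypothetical and only loosely mirror a three-table layout.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each script maps to the scripts whose outputs it reads.
DEPENDENCIES: dict[str, set[str]] = {
    "extract_facilities.py": set(),
    "extract_inspections.py": set(),
    "extract_staffing.py": set(),
    "build_facility_table.py": {"extract_facilities.py"},
    "build_inspection_table.py": {"extract_inspections.py", "build_facility_table.py"},
    "build_staffing_table.py": {"extract_staffing.py", "build_facility_table.py"},
}


def run_order(deps: dict[str, set[str]]) -> list[str]:
    """Order scripts so every script runs after the scripts it reads from."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(deps: dict[str, set[str]], changed: set[str]) -> list[str]:
    """Return the changed scripts plus everything that depends on them, in run order."""
    affected = set(changed)
    order = run_order(deps)
    # Topological order visits inputs before the scripts that read them,
    # so a single pass propagates "affected" status all the way downstream.
    for script in order:
        if deps.get(script, set()) & affected:
            affected.add(script)
    return [script for script in order if script in affected]


print(downstream_of(DEPENDENCIES, {"extract_staffing.py"}))
# ['extract_staffing.py', 'build_staffing_table.py']
```

A monthly staffing refresh then only re-runs two scripts, while a change to the facility extract cascades through every table built on it.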

Once development was close to the finish line, we set up biweekly calls with the clients to walk them through how to update the dashboards.

Just after our team wrapped up development, we asked the client to take the lead on updating the dashboards. We said we’d be in the background if they needed support, but otherwise they were on their own. This made them self-reliant, so when the pipeline first broke, they were better equipped to fix it themselves.

The developer and I also left about 18 pages of documentation, including an FAQ section.

On a closing note, this was one of my most fulfilling projects. I contributed to a tool that 5,000+ people used to search for a nursing home for their loved ones or for themselves. I learned how to user test, rapidly iterate, and design with empathy for the user.

Here are some screenshots of the internal tool that I worked on. All data is fake.

If you liked this article, please reach out! I’m a data visualization designer with experience in UX design, data engineering, and analytics. Shoot me an email!